What is Cache Memory? How It Reduces the Speed Mismatch Between Processor and Main Memory

Introduction

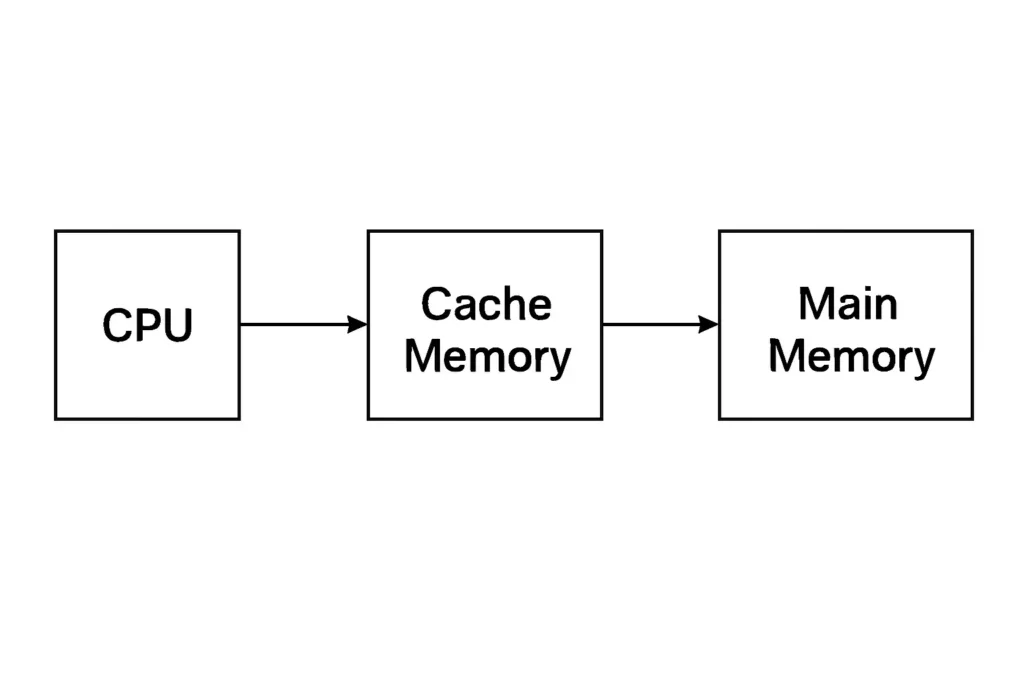

In computer architecture, cache memory is a small, high-speed memory located close to the central processing unit (CPU). It temporarily stores frequently accessed data and instructions, reducing the time the CPU takes to retrieve this information from main memory (RAM).

Definition of Cache Memory

Cache memory is defined as a high-speed storage area that holds frequently used data and instructions. It acts as a buffer between the CPU and RAM, improving processing speed and overall system performance.

Need for Cache Memory

Modern processors are extremely fast, while RAM is comparatively slow: a core can complete an instruction in a fraction of a nanosecond, whereas a DRAM access typically takes tens of nanoseconds. This speed difference creates a performance bottleneck, because the CPU must wait whenever it retrieves data from RAM. Cache memory helps by keeping frequently used data close to the processor, reducing data access time.

How Cache Memory Reduces the Mismatch

The cache narrows the speed gap in the following way:

- When the CPU needs data, it first checks the cache memory.

- If the data is present (cache hit), it is accessed quickly.

- If not (cache miss), the data is fetched from main memory and stored in the cache for future use.

This mechanism minimizes delays and keeps the processor busy with useful instructions, rather than waiting.
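
The benefit can be estimated with the standard average memory access time formula: hit time plus miss rate times miss penalty. The sketch below is only an illustration; the function name `average_access_time` and all timing figures are assumptions chosen for round numbers, not measurements of any real processor.

```python
def average_access_time(hit_time_ns, miss_rate, miss_penalty_ns):
    """Average memory access time = hit time + miss rate * miss penalty."""
    return hit_time_ns + miss_rate * miss_penalty_ns


# Illustrative figures only (assumed, not measured).
CACHE_HIT_NS = 1.0      # time to read a value that is already in the cache
RAM_ACCESS_NS = 100.0   # extra time spent going to main memory on a miss

# Without a cache, every access pays the full RAM latency.
no_cache = RAM_ACCESS_NS

# With a cache that misses on 5% of accesses (an assumed miss rate).
with_cache = average_access_time(CACHE_HIT_NS, miss_rate=0.05,
                                 miss_penalty_ns=RAM_ACCESS_NS)

print(f"without cache: {no_cache:.1f} ns, with cache: {with_cache:.1f} ns")
```

Under these assumed numbers the average access drops from 100 ns to roughly 6 ns, which is why even a small cache with a high hit rate hides most of the RAM latency.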

Levels of Cache Memory

Cache memory is generally divided into three levels:

1. L1 Cache (Level 1):

- Closest to CPU core

- Smallest in size (typically 16KB to 128KB per core)

- Fastest

2. L2 Cache (Level 2):

- Larger than L1 (typically 128KB to a few MB)

- Slower than L1, but faster than L3

- Serves requests that miss in L1

3. L3 Cache (Level 3):

- Shared between multiple cores

- Largest in size (typically 2MB to 50MB, more on some server processors)

- Slower than L1 and L2
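
As a rough sketch of how the levels are consulted in order, the example below walks from L1 to L3 and falls back to RAM, adding up the cost of each level it checks. The latencies in `LEVELS` and `RAM_CYCLES`, and the helper `access_cost`, are hypothetical values picked for illustration; real latencies vary by processor.

```python
# Hypothetical latencies in CPU cycles; real values vary by processor.
LEVELS = [("L1", 4), ("L2", 12), ("L3", 40)]
RAM_CYCLES = 200


def access_cost(address, contents):
    """Return (where_found, total_cycles) for one lookup.

    `contents` maps a level name to the set of addresses it currently holds.
    Every level that is checked adds its latency to the running total.
    """
    cycles = 0
    for name, latency in LEVELS:
        cycles += latency
        if address in contents.get(name, set()):
            return name, cycles
    # Missed in every cache level: pay the RAM latency as well.
    return "RAM", cycles + RAM_CYCLES


# Assumed cache contents for the demonstration.
contents = {
    "L1": {0x10},
    "L2": {0x10, 0x20},
    "L3": {0x10, 0x20, 0x30},
}

for addr in (0x10, 0x20, 0x30, 0x40):
    print(hex(addr), access_cost(addr, contents))
```

The further down the hierarchy a request has to travel, the more cycles it costs, which is why the smallest and fastest level is placed closest to the core.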

Types of Cache Memory

| Type | Description |
|---|---|
| Instruction Cache | Stores frequently used instructions |
| Data Cache | Stores frequently used data |
| Unified Cache | Stores both instructions and data |

Working Principle of Cache Memory

- CPU requests data

- Cache is checked

- If present → data is sent (cache hit)

- If absent → data is fetched from RAM (cache miss)

- Fetched data is stored in cache for future requests
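
The steps above can be sketched as a toy simulation. `SimpleCache` is a hypothetical class written for this example: it uses a Python dictionary as the cache, another dictionary as a stand-in for RAM, and it never evicts anything, so it models only the hit and miss flow.

```python
class SimpleCache:
    """Toy cache: a dict in front of a slower backing store (no eviction)."""

    def __init__(self, main_memory):
        self.main_memory = main_memory  # stands in for RAM
        self.lines = {}                 # address -> cached value
        self.hits = 0
        self.misses = 0

    def read(self, address):
        if address in self.lines:       # cache hit: answer immediately
            self.hits += 1
            return self.lines[address]
        self.misses += 1                # cache miss: fetch from RAM
        value = self.main_memory[address]
        self.lines[address] = value     # keep a copy for future requests
        return value


ram = {addr: addr * 2 for addr in range(8)}  # made-up memory contents
cache = SimpleCache(ram)

for addr in [1, 2, 1, 1, 3, 2]:
    cache.read(addr)

print(f"hits={cache.hits} misses={cache.misses}")
```

The first access to each address is a miss; every repeated access is a hit, so this access sequence produces three of each.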

Cache Mapping Techniques

Mapping determines where a block from main memory may be placed in the cache.

- Direct Mapping: Each block of RAM maps to a specific location in cache.

- Associative Mapping: Any block can go into any cache location.

- Set-Associative Mapping: A combination of the two. The cache is divided into sets; each block of RAM maps to one specific set but can occupy any location within that set.
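
A minimal sketch of how a memory block number could be turned into a cache position under these schemes, assuming the common modulo placement. The function names and the 8-line cache are illustrative assumptions, not a description of a particular processor.

```python
def direct_mapped_slot(block_number, num_lines):
    """Direct mapping: each block has exactly one possible cache line."""
    index = block_number % num_lines   # which line the block must use
    tag = block_number // num_lines    # identifies which block is in that line
    return index, tag


def set_associative_slot(block_number, num_lines, ways):
    """Set-associative mapping: each block has one possible set, but may
    sit in any of the `ways` lines inside that set."""
    num_sets = num_lines // ways
    set_index = block_number % num_sets
    tag = block_number // num_sets
    return set_index, tag


NUM_LINES = 8  # assumed cache size in lines

print(direct_mapped_slot(13, NUM_LINES))              # one fixed line
print(set_associative_slot(13, NUM_LINES, ways=2))    # one of 4 sets, 2 choices
print(set_associative_slot(13, NUM_LINES, ways=8))    # one set: fully associative
```

With `ways=1` the second function behaves like direct mapping, and with `ways` equal to the number of lines there is a single set, which corresponds to associative mapping.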

Cache Replacement Policies

When the cache is full, a replacement policy chooses which existing block to evict to make room for new data:

- FIFO (First-In-First-Out): Oldest data is removed first.

- LRU (Least Recently Used): Least recently accessed data is replaced.

- Random Replacement: Randomly selects a block to replace.
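
To compare the three policies, the sketch below counts hits for each one on the same access sequence. The function names, the access trace, and the capacity of 3 blocks are all assumptions made for this example; real hardware usually implements approximations of these policies.

```python
import random
from collections import OrderedDict, deque


def count_hits_fifo(accesses, capacity):
    """FIFO: evict the block that entered the cache first."""
    cache, order, hits = set(), deque(), 0
    for block in accesses:
        if block in cache:
            hits += 1
            continue
        if len(cache) == capacity:
            cache.discard(order.popleft())  # oldest arrival leaves
        cache.add(block)
        order.append(block)
    return hits


def count_hits_lru(accesses, capacity):
    """LRU: evict the block that was used least recently."""
    cache, hits = OrderedDict(), 0
    for block in accesses:
        if block in cache:
            hits += 1
            cache.move_to_end(block)        # mark as most recently used
            continue
        if len(cache) == capacity:
            cache.popitem(last=False)       # drop least recently used
        cache[block] = True
    return hits


def count_hits_random(accesses, capacity, seed=0):
    """Random: evict an arbitrary block (seeded so runs are repeatable)."""
    rng = random.Random(seed)
    cache, hits = [], 0
    for block in accesses:
        if block in cache:
            hits += 1
            continue
        if len(cache) == capacity:
            cache[rng.randrange(capacity)] = block  # overwrite a random slot
        else:
            cache.append(block)
    return hits


trace = [1, 2, 3, 1, 4, 1, 2, 5, 1, 2]  # made-up sequence of block numbers

print("FIFO hits:  ", count_hits_fifo(trace, 3))
print("LRU hits:   ", count_hits_lru(trace, 3))
print("Random hits:", count_hits_random(trace, 3))
```

On this particular trace LRU scores 4 hits and FIFO scores 3, because FIFO evicts block 1 even though it is still in active use. The ranking depends on the access pattern, so this is not a general result.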

Advantages of Cache Memory

- Increases processing speed

- Reduces CPU idle time

- Decreases data access latency

- Improves system efficiency

Cache Memory vs Main Memory

| Feature | Cache Memory | Main Memory (RAM) |
|---|---|---|
| Speed | Very high | Moderate |
| Size | Small | Large |
| Cost | Expensive | Comparatively cheap |
| Location | On or near CPU | On motherboard |
| Volatility | Volatile | Volatile |

Real-Life Analogy

Think of cache memory like a teacher keeping frequently used books on the desk instead of fetching them from the library every time. It saves time and improves work efficiency.

Limitations of Cache Memory

- Limited storage

- High cost (uses SRAM)

- Cannot be expanded by the user

Conclusion

Cache memory plays a crucial role in modern computing. It reduces the mismatch between the fast speed of the processor and the slower main memory, ensuring smooth and efficient performance. Understanding its function is essential for anyone studying computer architecture.

FAQs

Q1. What is cache memory in a computer?

Cache memory is a small, fast memory between the CPU and RAM that stores frequently used data and instructions.

Q2. Why is cache memory important?

It reduces access time and prevents the CPU from waiting for data from the slower main memory.

Q3. What is the difference between RAM and cache memory?

RAM is larger and slower, while cache is smaller but faster and closer to the CPU.

Q4. How does cache memory improve CPU performance?

It stores reusable data and instructions so the CPU doesn’t need to fetch them from RAM repeatedly.
